Advancing Quality Engineering with Synthetic Data and Data Privacy by Design

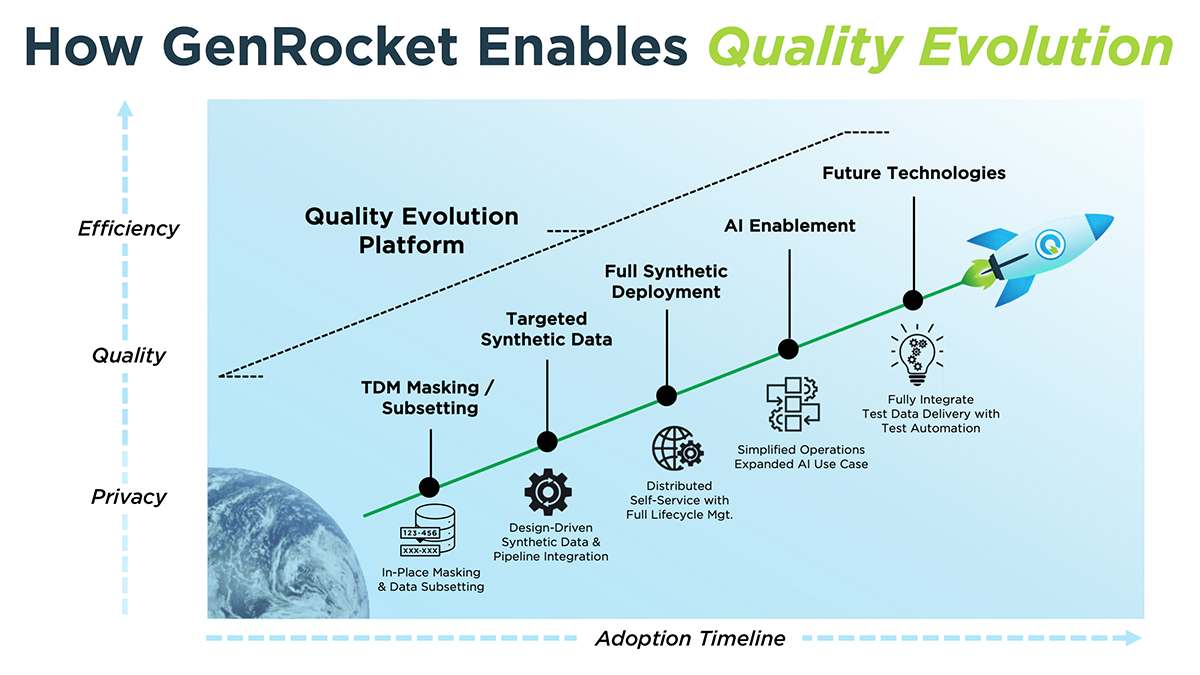

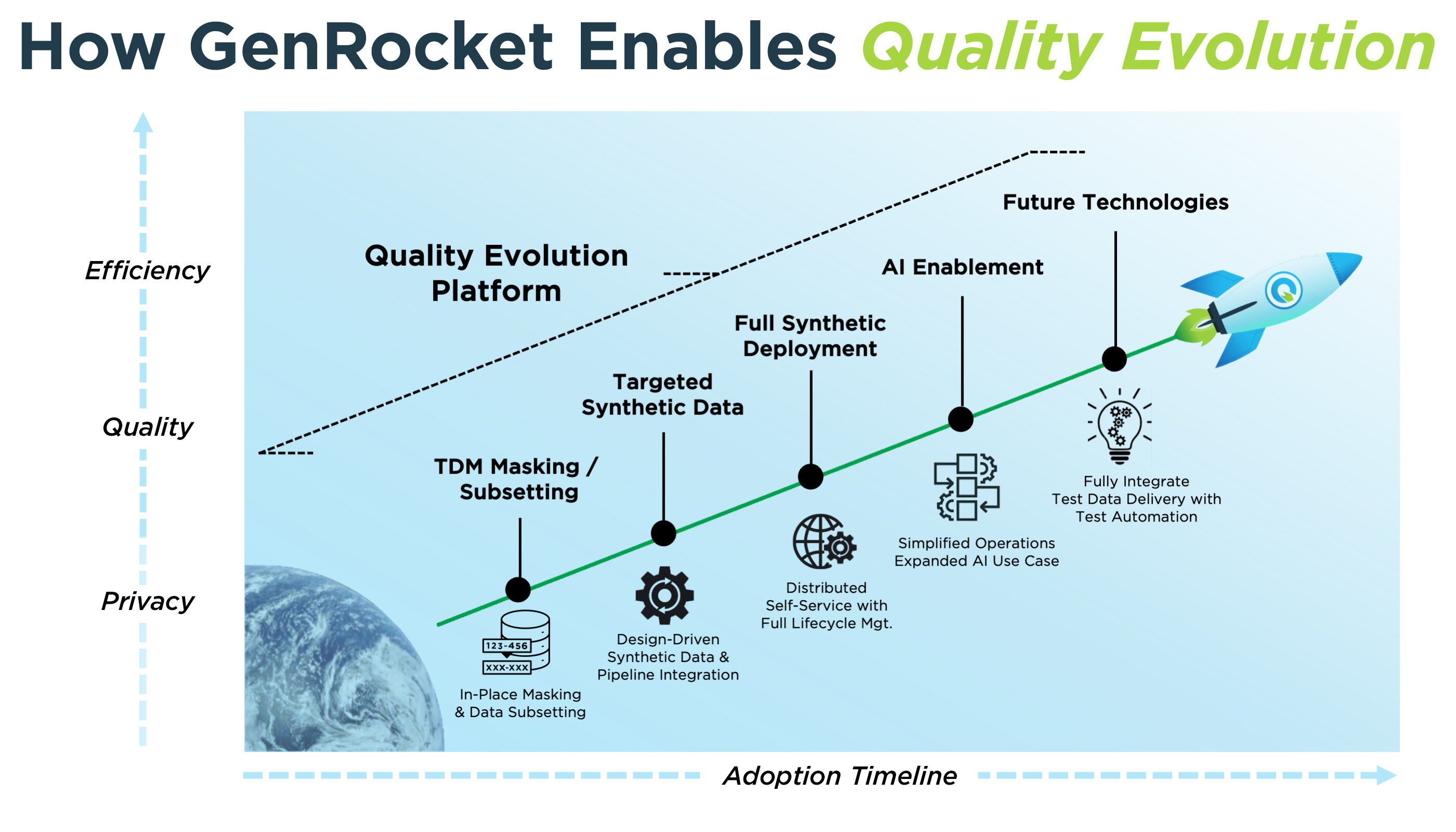

In the rapidly evolving landscape of software development and testing, enterprises are facing unprecedented challenges around data quality, speed, and privacy. GenRocket’s Quality Evolution Platform (QEP) is the industry’s first integrated solution that bridges legacy test data management (TDM) with design-driven synthetic data and an AI-orchestrated future. QEP gives engineering teams complete control over test and training data, so they can increase coverage, protect privacy, and release faster, without waiting on production refreshes or exposing PII.

It’s Time to Focus on Quality, Efficiency and Privacy

As the pace of development accelerates and regulatory demands heighten, the traditional copy-and-mask approaches struggle to keep pace: they’re slow to refresh, expensive to store, and—because production data reflects yesterday’s scenarios—they miss unexpected use case scenarios.

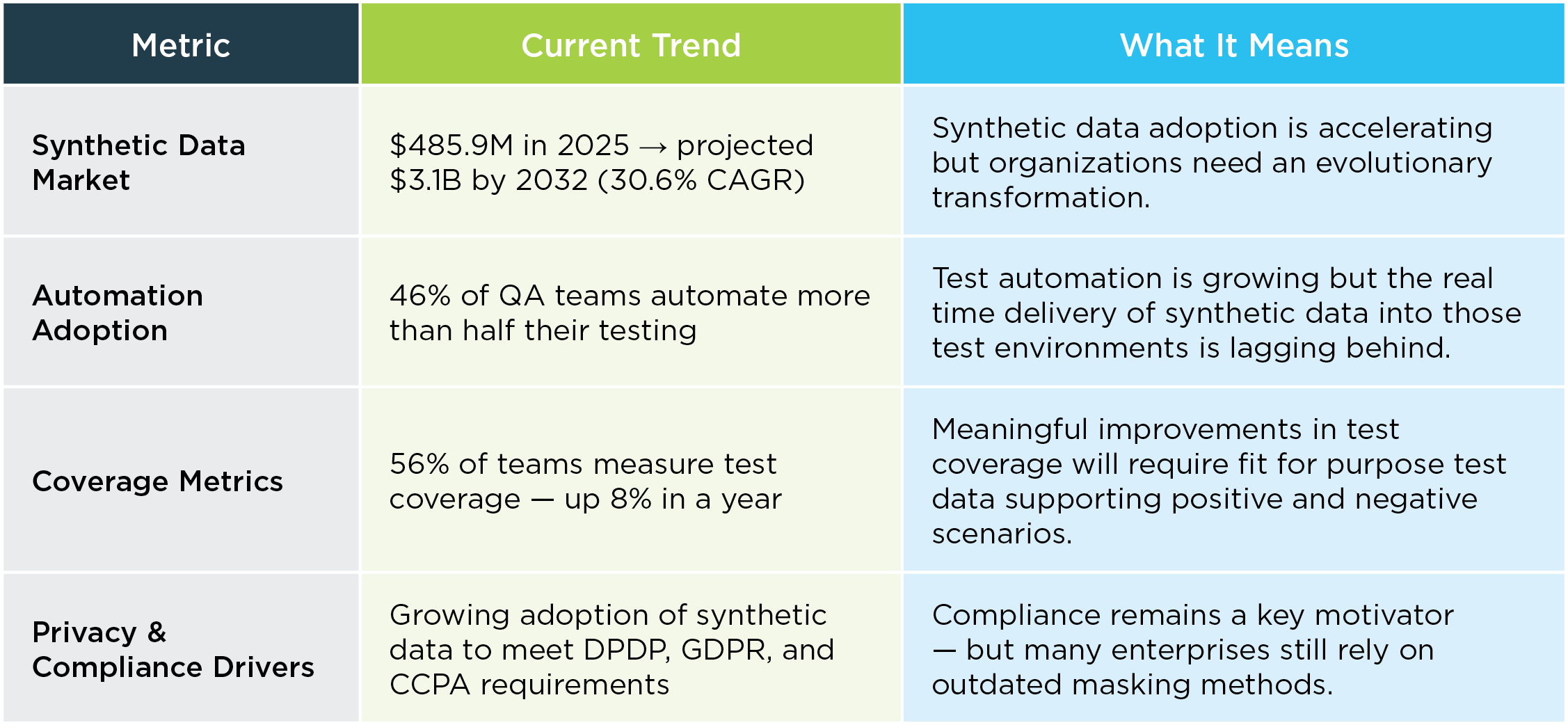

Copying production data, even with masking algorithms that obfuscate sensitive values, increases cyber risk and hampers innovation. Increasingly, enterprise quality engineering organizations are looking to synthetic data to eliminate that risk and fill the inevitable gaps in production data sets. According to industry data, the global synthetic test data market is projected to reach USD 3.1 billion by 2032, driven by organizations seeking compliant solutions for privacy and faster testing cycles. (source: coherentmarketinsights)

Market signals are clear:

- Breaches are costlier: IBM’s 2024 study shows the global average cost of a breach climbed to $4.88M, a 10% jump year over year. Organizations using security AI and automation reduced breach costs by an average of $2.2M, underscoring the ROI of automation and privacy-by-design practices across the SDLC. (cdn.table.media)

- Privacy rules are tightening: India’s Digital Personal Data Protection (DPDP) Act is moving toward enforcement via draft 2025 Rules released for consultation, with the government signaling formal rules by late September 2025. Global teams can’t rely on obfuscation alone; they must prevent sensitive data from leaving production. (Press Information Bureau)

- Quality is a board-level topic: The 2024–25 World Quality Report highlights that data quality and GenAI-driven automation are now central to modern QA and testing strategies. (Capgemini)

- DevOps needs dependable data: DORA’s 2024 research shows teams are rapidly adopting AI, but their performance still hinges on fundamentals like robust testing and stable pipelines—i.e., having the right data at the right time. (Google Cloud)

QEP is our answer: a pragmatic, step-by-step journey that enables a transition from production-data-dependent testing to a synthetic-first model—culminating in AI-orchestrated design and delivery.

Key Trends Driving the Quality Evolution Platform:

- Enterprises want to remove production data from lower environments for compliance and safety.

- There’s unprecedented demand for automated, end-to-end synthetic data pipelines.

- Quality coverage matters: 56% of QA teams now measure themselves on how well tests actually cover business risk—not just quantity.

- Automation transforms speed: 46% of testing teams have replaced at least 50% of manual testing with automated approaches driven by AI and synthetic data.

Introducing Quality Evolution Platform: The Core Pillars

Quality

Enterprises now demand high-quality test data to ensure optimal coverage for both functional and non-functional testing processes. GenRocket’s design-driven synthetic data approach lets teams dynamically generate rich, fit-for-purpose data, maximizing test coverage.

- 56% of QA teams use “Test Coverage Metrics” as a primary measure of success, up from 48% two years ago—showing a clear focus on smarter testing rather than simply more testing. (practitest)

Efficiency

As software development fully embraces DevOps and CI/CD, the need for just-in-time, on-demand test data is critical.

- 46% of teams have automated at least half their test cases, a figure only set to rise. (testlio)

- The synthetic data software market itself is growing at over 30% CAGR, as companies look to boost efficiency in development and testing cycles. (360iresearch)

Pro Tip: GenRocket’s integrations with the DevOps ecosystem allow real-time data generation, reducing bottlenecks and enabling true agile innovation.

Privacy

Privacy is a non-negotiable foundation for modern testing. With expanding global privacy regulations, organizations are transitioning away from production data altogether. Synthetic data not only provides what’s needed for robust testing but also eliminates sensitive data risks.

- Regulatory pressures and high-profile breaches have accelerated the shift to production data alternatives.

- Demand for structured and unstructured synthetic data is growing rapidly for privacy-centric applications, with banking, financial services, insurance, healthcare, and retail leading the adoption.

Inside GenRocket’s Quality Evolution Platform

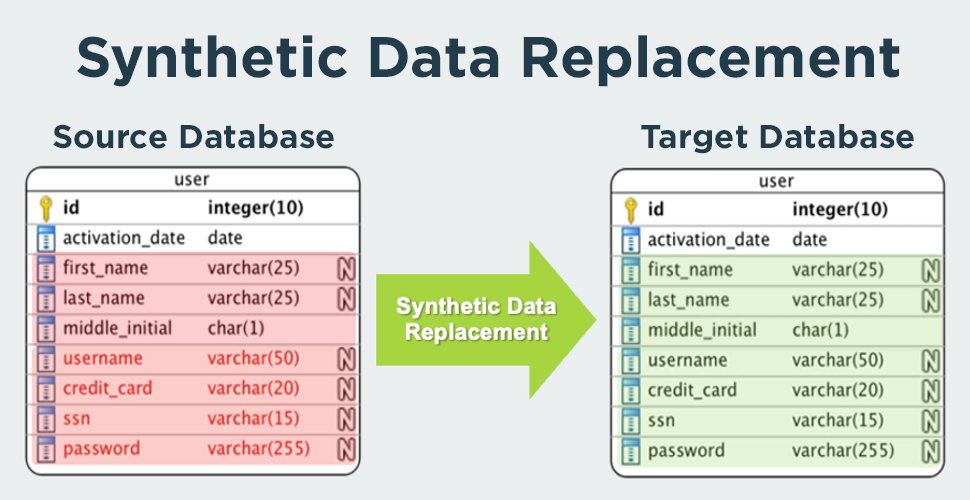

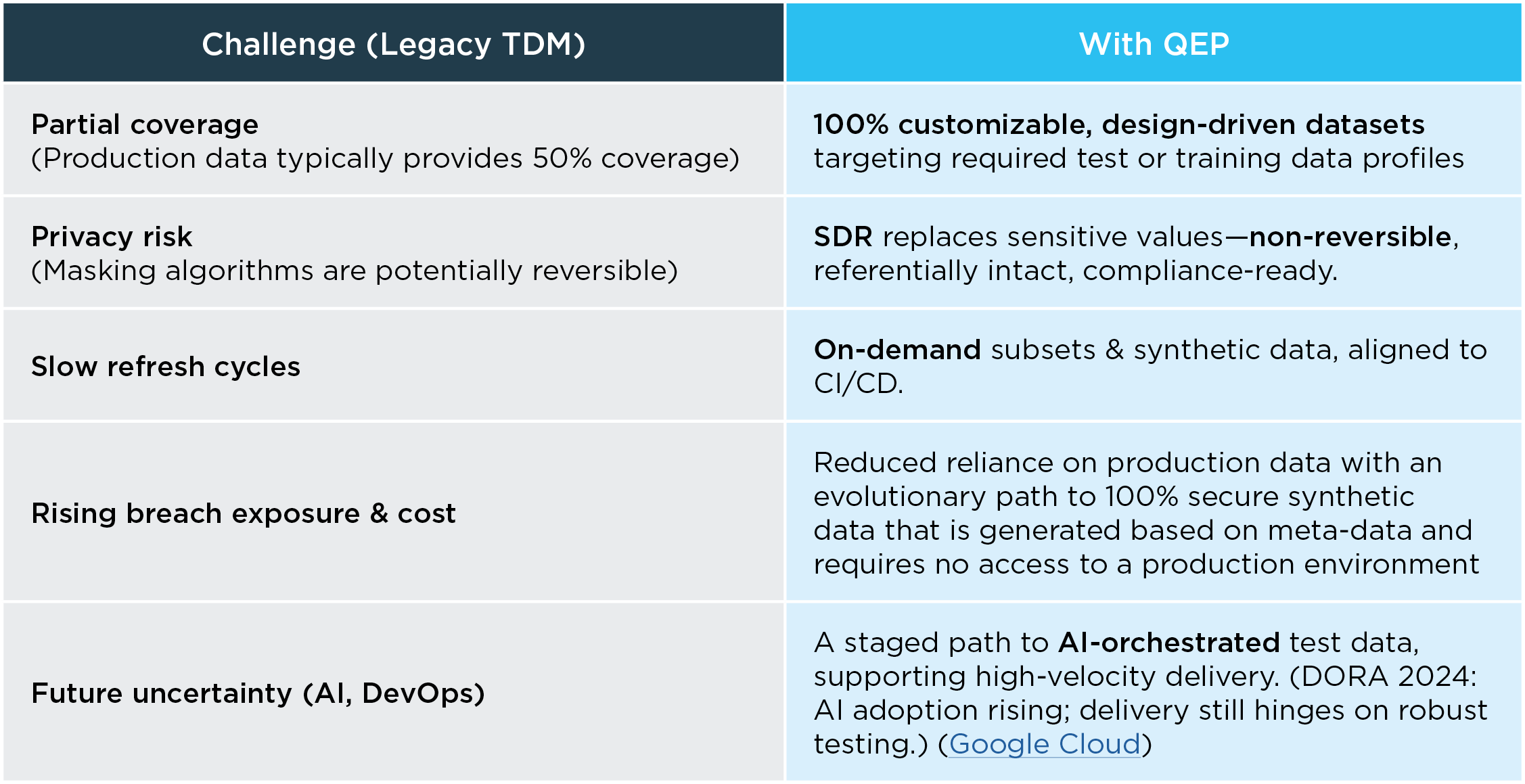

Synthetic Data Replacement (SDR)

We start by securing lower environments using Synthetic Data Replacement (SDR)—a patented process that replaces sensitive values with controlled, conditioned synthetic data. Unlike traditional masking methods, SDR ensures data cannot be reverse-engineered, is fully compliant with privacy laws, and maintains complete referential integrity across tables.
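To make the idea of consistent replacement concrete, here is a minimal Python sketch. It is an illustration only and not GenRocket’s patented SDR process: the table layouts, the function names `build_replacements` and `replace_column`, and the 9-digit stand-in format are all assumptions made for this example. Each distinct sensitive value receives a randomly drawn stand-in that is not computed from the original, and the same mapping is applied to the parent and child tables so their join keys still match.

```python
import random


def build_replacements(values, seed=None):
    """Map each distinct sensitive value to a randomly drawn synthetic value."""
    rng = random.Random(seed)
    mapping = {}
    used = set()
    for value in values:
        if value in mapping:
            continue
        # The stand-in is drawn at random, not computed from the original,
        # so the original cannot be derived back from it.
        candidate = f"{rng.randrange(10**9):09d}"
        while candidate in used:
            candidate = f"{rng.randrange(10**9):09d}"
        used.add(candidate)
        mapping[value] = candidate
    return mapping


def replace_column(rows, column, mapping):
    """Return copies of the rows with one column swapped for synthetic values."""
    return [{**row, column: mapping[row[column]]} for row in rows]


# Hypothetical parent and child tables that share a sensitive key.
customers = [
    {"ssn": "123-45-6789", "name": "Ana"},
    {"ssn": "987-65-4321", "name": "Raj"},
]
claims = [
    {"claim_id": 1, "ssn": "123-45-6789"},
    {"claim_id": 2, "ssn": "987-65-4321"},
    {"claim_id": 3, "ssn": "123-45-6789"},
]

mapping = build_replacements([c["ssn"] for c in customers], seed=42)
masked_customers = replace_column(customers, "ssn", mapping)
masked_claims = replace_column(claims, "ssn", mapping)
```

Because both tables go through the same mapping, a join on the replaced key returns the same row pairs as before. A real deployment would also replace the other sensitive columns (such as `name` here) and would discard the mapping once the run completes.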

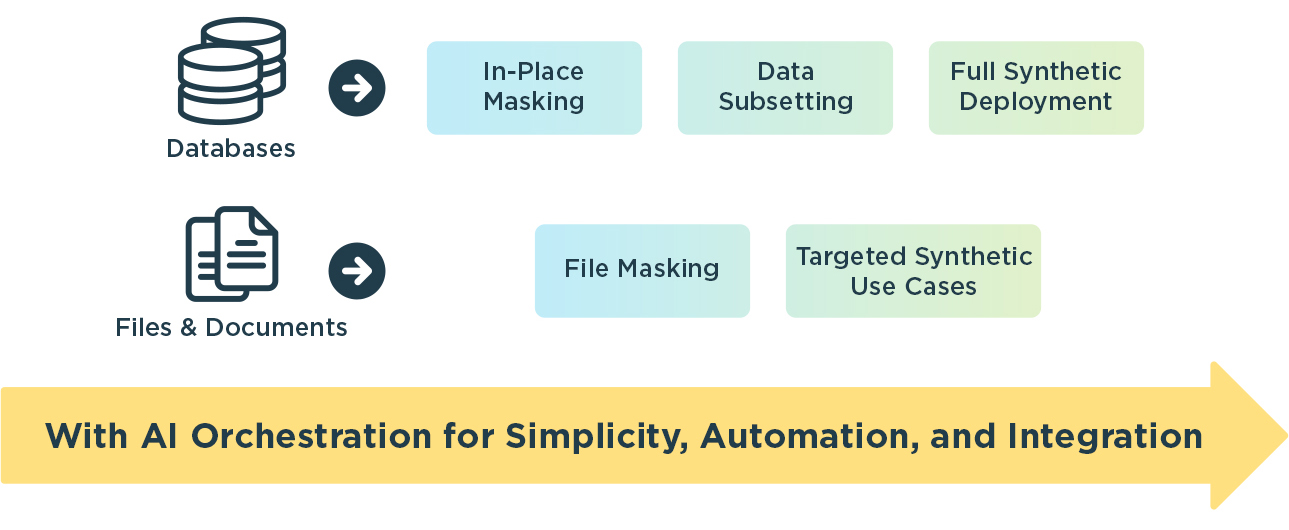

A TDM Bridge to the Future

The transformation to synthetic data is a critical process, but it comes with the challenge of adopting and deploying new technology across the organization. It’s unrealistic to expect this to happen overnight. That’s why GenRocket has created a TDM Bridge to the future. We’ve added the essential elements of traditional Test Data Management to QEP, enabling a graceful migration from production data to synthetic data.

In-Place Masking

A customer undertaking a transition to synthetic data will often begin their journey with in-place masking. Using Synthetic Data Replacement (SDR), sensitive values are replaced in production database copies. The result is a gold copy: referentially intact, with non-reversible synthetic data values. Teams can mask millions of rows per minute via parallel processing and preserve integrity across related tables for Oracle, SQL Server, DB2, PostgreSQL, and MySQL.

Intelligent Subsetting

From the gold copy, QEP produces fit-for-purpose subsets that align to test objectives, slashing storage costs and eliminating long refresh cycles. Reusable Test Data Projects deliver fresh data subsets on demand directly into CI/CD pipelines.
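The core of a referentially intact subset can be sketched in a few lines of Python. This is a simplified illustration rather than QEP’s subsetting engine: the `policies` and `claims` tables, the `subset_with_children` helper, and the "California policies" test objective are hypothetical. The sketch selects the parent rows a test needs, then keeps only the child rows whose foreign key points at a selected parent.

```python
def subset_with_children(parents, children, parent_key, foreign_key, predicate):
    """Select parent rows matching a test objective plus their child rows."""
    selected = [row for row in parents if predicate(row)]
    keys = {row[parent_key] for row in selected}
    # Keep only children whose foreign key references a selected parent,
    # so the subset never contains orphaned rows.
    related = [row for row in children if row[foreign_key] in keys]
    return selected, related


# Hypothetical gold-copy tables.
policies = [
    {"policy_id": 1, "state": "CA", "type": "auto"},
    {"policy_id": 2, "state": "NY", "type": "home"},
    {"policy_id": 3, "state": "CA", "type": "home"},
]
claims = [
    {"claim_id": 10, "policy_id": 1},
    {"claim_id": 11, "policy_id": 2},
    {"claim_id": 12, "policy_id": 3},
    {"claim_id": 13, "policy_id": 3},
]

# Test objective (assumed for illustration): exercise California policies only.
ca_policies, ca_claims = subset_with_children(
    policies, claims, "policy_id", "policy_id", lambda p: p["state"] == "CA"
)
```

Here the subset holds two of the three policies and three of the four claims, and every claim still references a policy that is present.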

Masking for Subsets & Micro-Subsets

Here are the key features of GenRocket’s data subsetting capabilities:

- Reduce storage & accelerate provisioning

- Eliminate data reservation & refresh cycles

- Execute & reuse Test Data Projects

- High performance: 2.5 million rows/minute

- Supported databases include:

  - Oracle

  - MS SQL Server

  - IBM DB2

  - PostgreSQL

  - MySQL

  - Sybase

File Masking

QEP extends protection beyond databases to files—CSV, fixed-width, JSON, ORC, X12 EDI, and more—so you can safely test Guidewire, Workday, SAP, PeopleSoft, and NoSQL ecosystems without PII exposure.

Ensure Data Privacy for Any File Type

Here are the key features of GenRocket’s file masking capabilities:

- Selectively mask any column with SDR

- Support for COTS and NoSQL databases

- Database bulk load or import via API

- Supported File Types:

  - Any delimited file (e.g., CSV)

  - Any fixed file format (e.g., VSAM)

  - X12 EDI

  - JSON

  - ORC (Hadoop)
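Selective column masking for a delimited file can be sketched with Python’s standard `csv` module. This is a hedged illustration, not GenRocket’s implementation: the `mask_csv` function, the sample data, and the `email_000001`-style placeholder tokens are assumptions. A production tool would generate realistic, conditioned values instead of numbered tokens.

```python
import csv
import io


def mask_csv(text, columns):
    """Replace values in the chosen columns; leave every other column as-is."""
    replacements = {}  # (column, original value) -> placeholder token

    def synthetic(column, original):
        key = (column, original)
        if key not in replacements:
            # Numbered token assigned on first sight; repeated originals get
            # the same token so the file stays consistent with itself.
            replacements[key] = f"{column}_{len(replacements) + 1:06d}"
        return replacements[key]

    reader = csv.DictReader(io.StringIO(text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        for column in columns:
            row[column] = synthetic(column, row[column])
        writer.writerow(row)
    return out.getvalue()


sample = (
    "id,email,plan\n"
    "1,ana@example.com,gold\n"
    "2,raj@example.com,silver\n"
    "3,ana@example.com,gold\n"
)
masked = mask_csv(sample, ["email"])
print(masked)
```

Only the `email` column changes. The two rows that shared an address still share a replacement value, and the untouched `id` and `plan` columns pass through unchanged.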

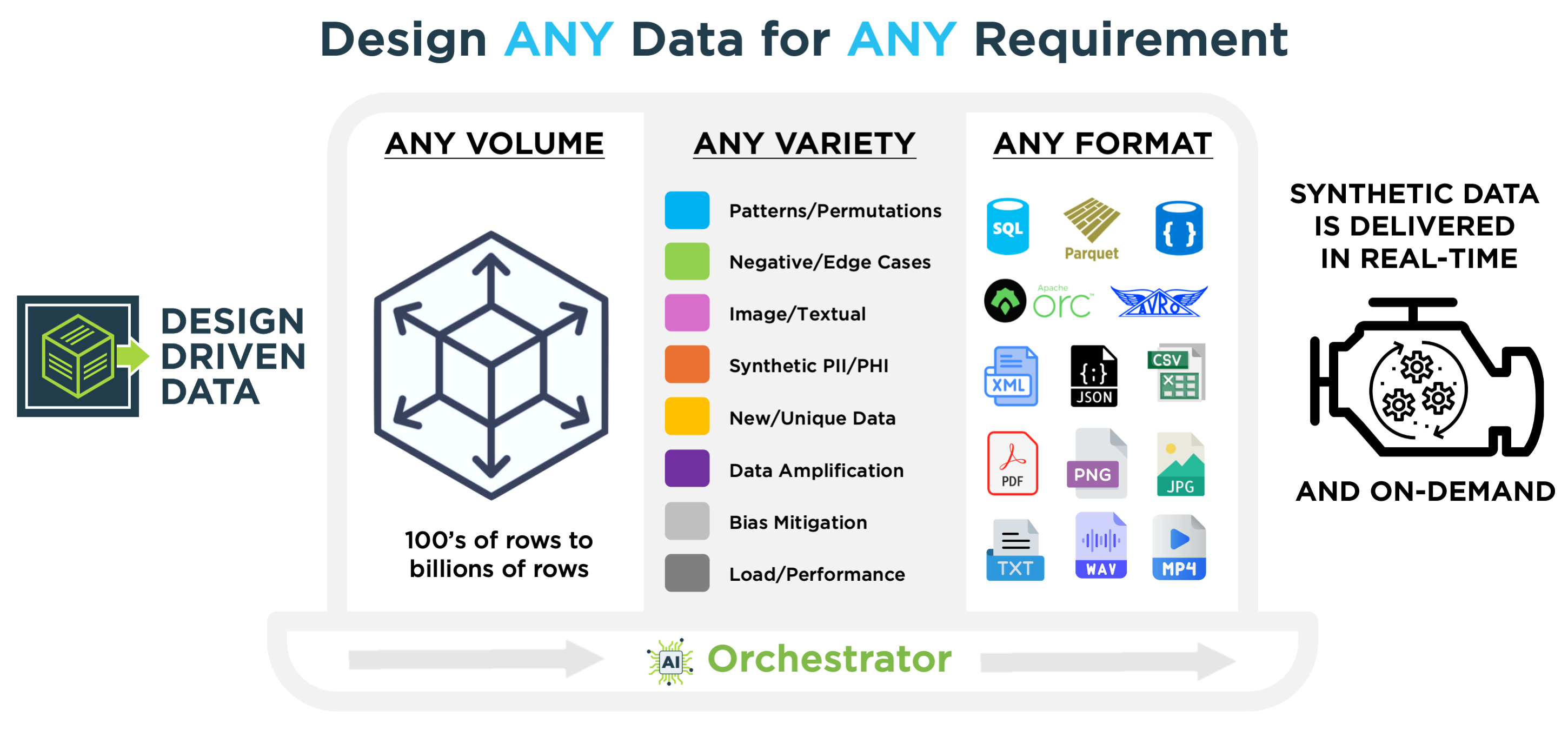

Design-Driven Synthetic Data

The centerpiece of QEP is Design-Driven Data generation. With 750+ intelligent generators and 110+ output formats, teams create deterministic, referentially correct synthetic datasets that cover every required combination and permutation—including negative values and rare edge cases that never appear in production. Result: full coverage by design, not by chance.
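As a rough illustration of coverage by design, the following Python sketch enumerates every combination of a few hand-picked value domains, including negative and boundary values. It does not use GenRocket’s generators: the field names, the domains, and the limits they probe (a 10,000 claim ceiling, an age threshold of 18) are hypothetical choices for this example.

```python
from itertools import product

# Hypothetical value domains, chosen to include negative values and the
# points just below, at, and just above each assumed boundary.
domains = {
    "claim_amount": [-1, 0, 1, 9_999, 10_000, 10_001],
    "policy_status": ["active", "lapsed", "cancelled"],
    "claimant_age": [17, 18, 65, 120],
}


def generate_cases(domains):
    """Yield one test data case per combination, in a fixed, repeatable order."""
    names = list(domains)
    combos = product(*(domains[name] for name in names))
    for case_id, values in enumerate(combos, start=1):
        yield {"case_id": case_id, **dict(zip(names, values))}


cases = list(generate_cases(domains))
print(len(cases))  # 6 * 3 * 4 = 72 combinations
print(cases[0])
```

Because the output depends only on the declared domains, every run produces the same 72 cases in the same order, and a combination that never occurs in production (for example, a negative claim amount on a cancelled policy) is guaranteed to be present.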

AI Orchestrator (The Next Evolution)

QEP’s next phase is the AI Orchestrator: a natural-language interface that converts intent (“Generate 5000 claim scenarios with boundary conditions and fraud patterns”) into executable Test Data Cases and orchestrates delivery into your test frameworks. Choose from guided deterministic designs or AI-assisted patterns while maintaining enterprise security and control over data quality.

- Novice users will be guided by a deterministic algorithm to design data

- G-Storyboard will translate natural language requirements into G-Cases

- Intermediate and Advanced users will use a fully conversational approach

- AI can optionally be turned off to meet customer security requirements around the internal use of AI

From Legacy TDM to Synthetic-First: What Changes with QEP

The momentum isn’t driven by regulatory pressure alone. Analysts forecast that by 2030, synthetic data will overshadow real data in AI model training, reflecting a broader industry shift to privacy-safe, scalable data practices that also benefit testing. (TechRadar)

Quality Evolution Has Only Just Begun

The benefits of synthetic data transformation are validated by recent market research. The data tells a clear story — progress has been made, but the industry still has a long way to go. Test data management is evolving rapidly, yet the gap between intent and execution remains wide.

The Roadmap for Synthetic Data Transformation

- Secure what you have: Establish SDR-based in-place masking to create gold database copies for lower environments.

- Provision focused data: Deliver intelligent subsets for each unique testing objective; standardize “fresh data on demand.”

- Design for coverage: Fill data gaps with design-driven synthetic data tied to test case objectives (edge cases, negative paths, permutations, etc.).

- Scale with AI: Use the AI Orchestrator to translate intent to data designs and orchestrate test cases & test data cases in lockstep.

This staged adoption yields quick wins while building toward automation at scale.

QEP isn’t just another tool; it’s a roadmap for modern quality engineering—grounded in privacy, coverage, and automation. Start with secure in-place masking. Add intelligent subsetting. Design synthetic data for full coverage. Then let AI help you orchestrate it all.